Hanyang University

College of Engineering


AI Technology That Understands Silent Speech Is Developed


Posted by: Hanyang University College of Engineering (help@hanyang.ac.kr) | Date: 23.06.29


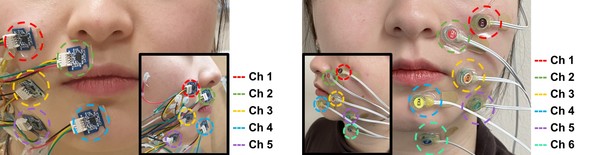


Expected to greatly assist communication for patients with vocal cord dysfunction

Hanyang University announced on April 24 that a research team led by Professor Im Chang-hwan of the Department of Biomedical Engineering has developed the world's first silent speech interface using three-axis accelerometers. A silent speech interface is a technology that recognizes words by analyzing mouth movements made without producing any sound. The technology is expected to help patients with vocal cord disorders communicate in the future.

Compared with the voice recognition technology widely used in AI speakers, silent speech interfaces are still at an early stage of development. The most straightforward approach is to capture changes in the shape of the mouth with a camera, but this works only where the entire face is visible. Other methods attach sensors to articulatory organs such as the tongue, lips, and jaw, but these sensors are bulky and can be inconvenient in daily life. Another approach measures the electromyography or skin deformation of facial muscles during speech. However, it requires sensors to remain attached to the skin at all times, which is inconvenient, and it also suffers from poor sensor durability and low accuracy.

Professor Im's team proposed a new method that recognizes the intended speech from the acceleration signals measured by three-axis accelerometers attached around the mouth during silent speech. The team used four accelerometers to measure the movement of the muscles around the mouth while 40 words commonly used in daily life were silently spoken. To recognize these silent speech words, the team proposed a new deep learning architecture that combines a convolutional neural network (CNN) with a long short-term memory (LSTM) network, and it classified the words with an accuracy of 95.58%. By comparison, classifying the same words with six electromyography sensors yielded an accuracy of 89.68%.
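To make the general idea of a CNN-LSTM word classifier more concrete, here is a minimal, untrained forward-pass sketch in plain Python. It is not the research team's model: the article gives no layer sizes, kernel widths, window lengths, or preprocessing, so the 12-channel input layout (4 sensors × 3 axes), 8 convolution filters, kernel width 5, LSTM hidden size 16, the 50-sample window, and the function names such as `cnn_lstm_forward` are all illustrative assumptions. The convolution extracts short local patterns from the acceleration signals, the LSTM summarizes how those patterns evolve over time, and a final linear layer with softmax scores the 40 candidate words. Because the weights are random, the output probabilities carry no meaning; the sketch only shows how data flows through such an architecture.

```python
import math
import random

NUM_SENSORS = 4             # four accelerometers, as in the article
AXES = 3                    # three-axis sensors
NUM_WORDS = 40              # 40 everyday words, as in the article
IN_CH = NUM_SENSORS * AXES  # 12 input channels (assumed layout)


def rand_matrix(rng, rows, cols, scale=0.1):
    """Small random weights; a real model would learn these by training."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def conv1d_relu(x, kernels, bias):
    """x: [T][C], kernels: [F][K][C] -> [T-K+1][F] with 'valid' padding and ReLU."""
    k = len(kernels[0])
    out = []
    for t in range(len(x) - k + 1):
        row = []
        for f, kern in enumerate(kernels):
            s = bias[f]
            for dk in range(k):
                for c, w in enumerate(kern[dk]):
                    s += w * x[t + dk][c]
            row.append(max(0.0, s))
        out.append(row)
    return out


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_last_hidden(seq, W, U, b, hidden):
    """Standard LSTM from a zero state; W: [4H][D], U: [4H][H], b: [4H].
    Gate order in the stacked weights (our convention): input, forget, cell, output."""
    h = [0.0] * hidden
    c = [0.0] * hidden
    for x in seq:
        z = [b[j] + sum(W[j][d] * x[d] for d in range(len(x)))
             + sum(U[j][m] * h[m] for m in range(hidden))
             for j in range(4 * hidden)]
        i = [sigmoid(v) for v in z[0:hidden]]
        f = [sigmoid(v) for v in z[hidden:2 * hidden]]
        g = [math.tanh(v) for v in z[2 * hidden:3 * hidden]]
        o = [sigmoid(v) for v in z[3 * hidden:4 * hidden]]
        c = [f[j] * c[j] + i[j] * g[j] for j in range(hidden)]
        h = [o[j] * math.tanh(c[j]) for j in range(hidden)]
    return h  # final hidden state summarizes the whole window


def softmax(logits):
    m = max(logits)
    e = [math.exp(v - m) for v in logits]
    s = sum(e)
    return [v / s for v in e]


def cnn_lstm_forward(window, seed=0, filters=8, kernel=5, hidden=16):
    """window: [T][12] acceleration samples -> 40 word probabilities (random, untrained weights)."""
    rng = random.Random(seed)
    kernels = [rand_matrix(rng, kernel, IN_CH) for _ in range(filters)]
    conv_b = [0.0] * filters
    W = rand_matrix(rng, 4 * hidden, filters)
    U = rand_matrix(rng, 4 * hidden, hidden)
    b = [0.0] * (4 * hidden)
    V = rand_matrix(rng, NUM_WORDS, hidden)
    features = conv1d_relu(window, kernels, conv_b)  # CNN stage
    h = lstm_last_hidden(features, W, U, b, hidden)  # LSTM stage
    logits = [sum(V[k][j] * h[j] for j in range(hidden)) for k in range(NUM_WORDS)]
    return softmax(logits)


# Usage with a synthetic 50-sample window (random noise, not real sensor data).
_rng = random.Random(42)
window = [[_rng.gauss(0.0, 1.0) for _ in range(IN_CH)] for _ in range(50)]
probs = cnn_lstm_forward(window)
best_word_index = max(range(NUM_WORDS), key=lambda k: probs[k])
```

In a working system the weights would be trained on labeled recordings, and the index with the highest probability would be mapped back to one of the 40 vocabulary words.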
Regarding the technology, Professor Im said, "This technology could be used as a new means of communication for people with vocal cord disorders who have difficulty speaking." He added, "We are developing wireless sensors smaller than a baby's fingernail for practical application." The research team is also conducting research on speech synthesis, in which it has already produced visible results, and is preparing a follow-up paper.

This study, supported by the AI Graduate School Support Project of the Institute of Information & Communications Technology Planning & Evaluation and the Alchemist Project of the Ministry of Trade, Industry and Energy, was published in the April issue of "Engineering Applications of Artificial Intelligence." The technology was also recently registered as a U.S. patent, for which the team applied in September 2020.

Silent speech interface system using a three-axis accelerometer (left) and a silent speech interface system using conventional electromyography (right)
